
Introduction To Deep Learning Interview Guide

Deep learning is one of the most popular niches in the world of data science. With the rise of self-driving cars and smart speakers, companies these days are also on the lookout for data scientists who have a knack for deep learning. Here, we cover some basic questions that may be asked regarding:

- Concepts behind deep learning

- Features that influence a neural network

- A brief explanation of some supervised learning methods (CNN and RNN)

Hope you find this guide useful!

Q) What is the difference between deep learning and machine learning?

Machine learning is a subfield of artificial intelligence that is widely used in data science. Here, statistics and algorithms are used to train machines with data: machine learning algorithms parse and study data in order to make informed decisions.

Deep Learning is a division of Machine Learning. It structures algorithms in layers to create an “artificial neural network” that can learn and eventually make intelligent decisions on its own.

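The basic idea behind deep learning was to loosely mimic the human brain, i.e. learning through a network made of many layers of artificial neurons. When the amount of data and the number of dimensions are large (as with images, audio, or text), deep learning is usually preferred over classical ML.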

Q) What is a neural network?

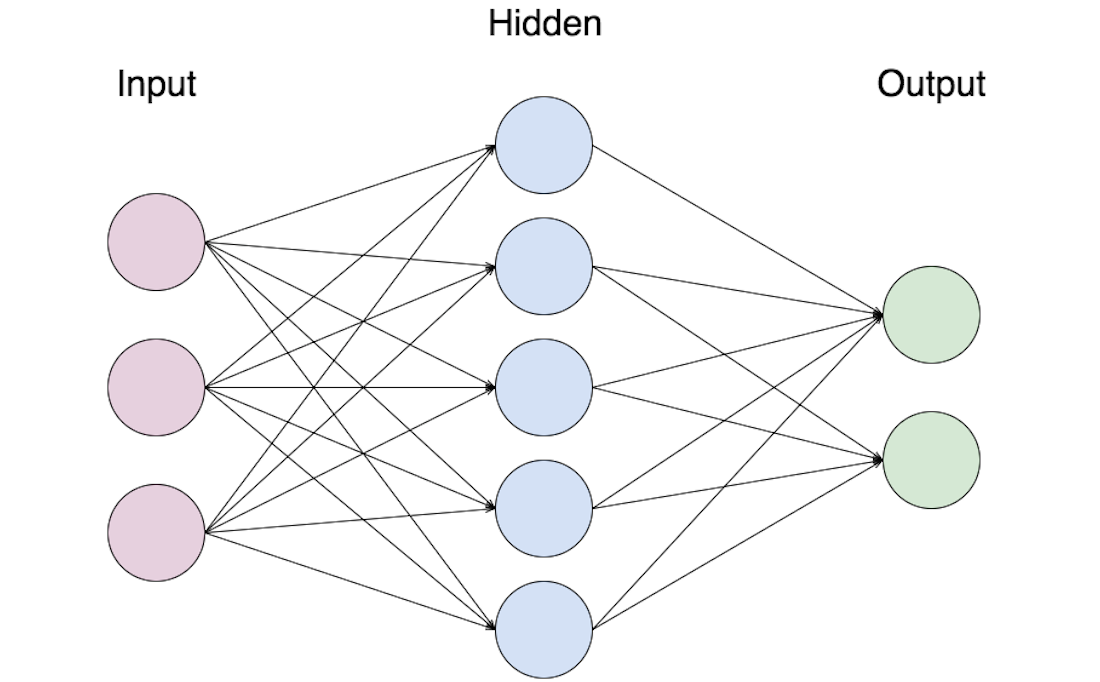

A neural network is a series of algorithms that attempts to identify underlying relationships and patterns in a large dataset. Its design is loosely inspired by the way the human brain works. A basic neural network consists of three kinds of layers:

- Input layer

- One or more hidden layers (where features are extracted from the input and most of the learned computation takes place)

- Output layer

Q) What is the role of weights and bias in a neural network?

Weights and biases are the learnable parameters of a deep learning model.

Weights control the strength of the connection between two neurons. Each weight is associated with an input feature and conveys how important that feature is in predicting the output value. For instance, if the associated weight approaches 0, that feature has little influence on the prediction.

A bias is an additional learnable parameter that is added to a neuron's weighted sum. Unlike a weight, it is not multiplied by any input from the previous layer, so it lets the neuron shift its output independently of the inputs. Together with the weights, it is used to tune the neuron's output.

When inputs are passed from one layer to the next, each neuron multiplies its inputs by the corresponding weights, sums the results, and adds its bias. This can be written as:

output = sum (weights * inputs) + bias
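As a minimal sketch of this formula, the snippet below computes one neuron's output in plain Python. The function name `neuron_output` and the input, weight, and bias values are hypothetical, chosen only for illustration. In a real network, an activation function is usually applied to this value afterwards.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias (before any activation function)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical example values: three inputs, three weights, one bias.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.0]  # a weight of 0.0 means the third input has no influence
bias = 0.1

print(neuron_output(inputs, weights, bias))  # prints 0.1
```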

Q) What is a perceptron? What is its role?

A single-layer perceptron is the fundamental unit of a neural network. It consists of input values, weights, a bias, a weighted sum, and an activation function. It performs these computations to detect features in the input data, and it is used in supervised learning as a binary classifier.

A perceptron learns from labeled data points. Training is an iterative loop: for each example, the perceptron makes a prediction, compares it with the label, and adjusts its weights and bias whenever the prediction is wrong. Repeating this loop over the data gradually improves the perceptron's output, so after enough passes its predictions become more accurate.
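The loop described above can be sketched with the classic perceptron learning rule. This is a minimal, illustrative implementation that assumes binary labels (0 or 1), a step activation, and a learning rate of 1.0; the function names are hypothetical. It is trained on the logical AND function, which is linearly separable, so the loop is guaranteed to stop making mistakes.

```python
def step(z):
    """Step activation: 1 if the weighted sum is positive, otherwise 0."""
    return 1 if z > 0 else 0


def predict(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Logical AND: only (1, 1) is labeled 1.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # prints [0, 0, 0, 1]
```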

Q) Differentiate between a single-layer perceptron and a multi-layer perceptron.

| Single-layer perceptron | Multi-layer perceptron (MLP) |
|---|---|
| Can only learn linearly separable patterns | Can classify data that is not linearly separable and generally performs better on complex tasks |
| Has few parameters (one weight per input, plus a bias) | Has many more parameters, spread across its hidden layers |
| Limited on large, complex datasets | Well suited to large, complex datasets |
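To illustrate the first row of the table: XOR is the classic pattern that is not linearly separable, so no single-layer perceptron can learn it, while a multi-layer perceptron with one hidden layer can represent it. The sketch below uses hand-picked weights (an assumption made for clarity; a real MLP would learn them) and a step activation. The names `step` and `mlp_xor` are hypothetical.

```python
def step(z):
    return 1 if z > 0 else 0


def mlp_xor(x1, x2):
    """Two hidden units (OR and AND) feed one output unit computing OR and not AND."""
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # fires if at least one input is 1
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # fires only if both inputs are 1
    return step(1.0 * h_or - 2.0 * h_and - 0.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", mlp_xor(x1, x2))
```

The hidden layer re-maps the four points into a space where a single straight line separates them, which is exactly what a single-layer perceptron cannot do on its own.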

Q) Explain the types of propagation in Deep Learning.

Forward propagation is the process of passing the inputs through the network layer by layer, from the input layer through the hidden layers to the output layer. At each layer, the weighted sum of the inputs plus the bias is passed through the activation function, and the result becomes the input to the next layer.

Backpropagation stands for backward propagation of errors. It is the technique used to improve the network's performance by working backward from the error. Starting from the cost function at the output, it applies the chain rule layer by layer to compute how much each weight and bias in every hidden layer contributed to the error. Gradient descent then uses these gradients to adjust the weights and biases so that the overall cost decreases.
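To make both directions concrete, here is a minimal sketch of a tiny network (2 inputs, 2 sigmoid hidden units, 1 sigmoid output) trained on a single example with a squared-error cost. The architecture, starting weights, learning rate, and function names are all assumptions chosen for illustration. `forward` performs forward propagation, `backward` applies the chain rule from the cost back to every weight and bias, and the loop feeds those gradients to plain gradient descent.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward propagation: inputs -> sigmoid hidden layer -> sigmoid output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y_hat


def cost(y_hat, y):
    """Squared-error cost for one training example."""
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, W1, b1, W2, b2):
    """Backpropagation: chain rule from the cost back to every weight and bias."""
    h, y_hat = forward(x, W1, b1, W2, b2)
    # Output layer: dC/dz_out = (y_hat - y) * sigmoid'(z_out), where sigmoid' = s * (1 - s).
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    dW2 = [delta_out * hj for hj in h]
    db2 = delta_out
    # Hidden layer: send the error back through W2, then through each hidden sigmoid.
    delta_h = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    dW1 = [[d * xi for xi in x] for d in delta_h]
    db1 = list(delta_h)
    return dW1, db1, dW2, db2


# Hypothetical starting parameters, training example, and learning rate.
W1, b1 = [[0.1, -0.2], [0.4, 0.3]], [0.0, 0.0]
W2, b2 = [0.5, -0.6], 0.0
x, y, lr = [1.0, 0.5], 1.0, 0.5

before = cost(forward(x, W1, b1, W2, b2)[1], y)
for _ in range(100):
    dW1, db1, dW2, db2 = backward(x, y, W1, b1, W2, b2)
    # Gradient descent: move every parameter a small step against its gradient.
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [bj - lr * g for bj, g in zip(b1, db1)]
    W2 = [w - lr * g for w, g in zip(W2, dW2)]
    b2 -= lr * db2
after = cost(forward(x, W1, b1, W2, b2)[1], y)
print(f"cost before: {before:.4f}, after: {after:.4f}")
```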

Q) What is the role of an activation function? Name a few types.

Activation functions introduce non-linearity into a network, allowing it to learn more complex functions; without them, any stack of layers would reduce to a single linear transformation. An activation function converts a node's input signal (its weighted sum plus bias) into an output signal, which is then used as an input to the next layer in the network.

Some commonly used activation functions are –

- ReLU – The rectified linear activation function or ReLU for short is a piecewise linear function that will output the input directly if it is positive, otherwise, it will give an output of 0.

- Binary step – The binary step function is a threshold-based activation function. If the input value is above a particular threshold, the neuron is activated and outputs 1; otherwise, it outputs 0. Because its output can only be 0 or 1, this function does not allow multi-value outputs.

- Softmax – The softmax function converts a vector of n raw scores into a probability distribution over n classes: every output lies between 0 and 1, and the outputs sum to 1. It is typically used in the output layer for multi-class classification.

- Sigmoid – The sigmoid activation function is also called the logistic function. It has traditionally been a popular activation function for neural networks. It transforms its input into a value between 0.0 and 1.0.

- Tanh – The hyperbolic tangent function, also known as tanh for short, is a nonlinear activation function with an S-shape similar to the sigmoid. It outputs values between -1.0 and 1.0. Because its output is centered on zero, it often trains better than the sigmoid in hidden layers.
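The five activation functions above can be sketched in a few lines of plain Python. This is a minimal illustration using their standard definitions; the binary step threshold of 0 is an assumed default, and the softmax subtracts the largest score before exponentiating, a common trick that leaves the result unchanged while avoiding overflow.

```python
import math


def relu(z):
    return max(0.0, z)


def binary_step(z, threshold=0.0):
    # Outputs 1 when the input exceeds the threshold, otherwise 0.
    return 1 if z > threshold else 0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    return math.tanh(z)


def softmax(scores):
    # Subtracting the max score does not change the result but avoids overflow in exp().
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), binary_step(z), round(sigmoid(z), 3), round(tanh(z), 3))
print([round(p, 3) for p in softmax([2.0, 1.0, 0.1])])
```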

Q) What are hyperparameters? How are they tuned for a neural network?

Hyperparameters are settings that define the structure of a neural network and how it is trained, such as the learning rate or the number of hidden layers. Unlike weights and biases, they are not learned from the data; they are chosen before training, often by trying several values and comparing the results on a validation set. Common examples include:

- Batch size: The number of training examples processed together before the network's parameters are updated. The training data is split into batches of this size.

- Epochs: An epoch is one complete pass through the training data. The number of epochs sets how many times the network sees the training data; because training is iterative, the right number varies with the data.

- Momentum: Momentum adds a fraction of the previous update to the current one, so each step depends on past gradients as well as the current one. It helps damp oscillations during training.

- Learning rate: The learning rate controls the size of each parameter update. A rate that is too high can make training unstable, while one that is too low makes learning slow.
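The sketch below shows where these hyperparameters enter a training loop. It is a simplified, assumed setup (plain Python, a one-dimensional toy loss f(w) = w², hypothetical function names): batch size and epochs together fix how many parameter updates happen, while the learning rate and momentum shape each individual update.

```python
import math


def total_updates(n_samples, batch_size, epochs):
    """Each epoch is one full pass over the data, split into batches of batch_size."""
    batches_per_epoch = math.ceil(n_samples / batch_size)
    return batches_per_epoch * epochs


def momentum_step(weight, velocity, grad, learning_rate, momentum):
    """SGD with momentum: the velocity keeps a decaying memory of previous steps."""
    velocity = momentum * velocity - learning_rate * grad
    return weight + velocity, velocity


# Batch size and epochs fix how many parameter updates happen.
print(total_updates(n_samples=1000, batch_size=32, epochs=10))  # prints 320

# Learning rate and momentum shape each update; the toy loss f(w) = w**2 has gradient 2*w.
w, v = 5.0, 0.0
for _ in range(200):
    w, v = momentum_step(w, v, grad=2 * w, learning_rate=0.05, momentum=0.9)
print(round(w, 4))  # close to the minimum at w = 0
```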

Q) What is Gradient Descent?

Gradient descent is an optimization algorithm that is used to minimize some function by repeatedly moving in the direction of steepest descent as specified by the negative of the gradient.

When training a neural network, gradient descent proceeds as follows:

- Initialize the weights and biases of the network

- Pass the input data forward through the network, starting at the input layer

- Determine the difference (the error) between the expected and predicted values

- Compute the gradient of the loss with respect to each weight and bias, and move each one a small step in the opposite direction to reduce the loss

- Repeat for multiple iterations until the weights settle on values that give a low loss
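The five steps can be mapped onto a minimal sketch that fits a straight line with gradient descent on a mean-squared-error loss. A one-weight linear model stands in for a full network here (an assumption to keep the example short), and the data, learning rate, iteration count, and the name `train_linear` are hypothetical.

```python
def train_linear(xs, ys, learning_rate=0.05, iterations=500):
    # Step 1: initialize the weight and bias.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):  # Step 5: repeat for many iterations.
        # Step 2: pass the inputs forward to get predictions.
        preds = [w * x + b for x in xs]
        # Step 3: measure the error between predicted and expected values.
        errors = [p - y for p, y in zip(preds, ys)]
        # Step 4: gradient of the mean squared error, then a step against it.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```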

Q) Explain the variants of Gradient Descent.

| Stochastic Gradient Descent | Batch Gradient Descent | Mini-batch Gradient Descent |
|---|---|---|
| Computes the gradient using a single sample. Each update is fast to compute and the weights are updated often, although the updates are noisy. | Computes the gradient using the entire dataset. When the dataset is large, each update is slow to compute, and the weights are updated only once per pass over the data. | A variation of stochastic gradient descent that computes the gradient on a small batch of samples instead of a single training example. It is the most widely used gradient descent variant. |
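The three variants differ only in how many samples feed each gradient estimate, so one hypothetical function can cover all of them through a `batch_size` argument: 1 gives stochastic gradient descent, the full dataset gives batch gradient descent, and anything in between gives mini-batch gradient descent. The linear model, data, learning rate, and epoch count below are illustrative assumptions.

```python
import random


def gradient_descent(xs, ys, batch_size, learning_rate=0.02, epochs=200, seed=0):
    """Fit y = w*x + b. batch_size=1 -> stochastic, len(xs) -> batch, otherwise mini-batch."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    updates = 0
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            grad_w = 2.0 / len(batch) * sum(e * xs[i] for e, i in zip(errors, batch))
            grad_b = 2.0 / len(batch) * sum(errors)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
            updates += 1
    return w, b, updates


xs = [i / 10 for i in range(40)]  # hypothetical inputs 0.0 .. 3.9
ys = [2.0 * x + 1.0 for x in xs]  # noise-free targets from y = 2x + 1
for name, size in [("stochastic", 1), ("mini-batch", 8), ("batch", len(xs))]:
    w, b, updates = gradient_descent(xs, ys, size)
    print(f"{name:>10}: w={w:.3f}, b={b:.3f}, weight updates={updates}")
```

With the same number of epochs, the printed update counts show how much more often stochastic and mini-batch descent adjust the weights than batch descent does.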

Q) What is CNN? Explain the key layers behind it.

A convolutional neural network, popularly called CNN, is a feedforward neural network that uses convolution in at least one of its layers. A convolutional layer contains a set of learnable filters (kernels). The key layers of a CNN are:

- Convolutional Layer – This layer performs the convolution operation: it slides each filter over small windows of the input image and computes a weighted sum at every position, producing a feature map.

- ReLU Layer – It introduces non-linearity into the network by setting all negative values in the feature map to zero. The output is a rectified feature map.

- Pooling Layer – It is a down-sampling operation that reduces the dimensionality of the generated feature map.

- Fully Connected Layer – This final layer recognizes and classifies the objects in the image.
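A toy, single-channel version of these four layers can be written in plain Python. This is only a sketch: the 5x5 image, the 2x2 filter, and the fully connected weights are hypothetical values chosen by hand (a real CNN learns its filters and weights during training), and the convolution is computed as cross-correlation with no padding and stride 1, which is how most deep learning libraries implement it.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (no padding, stride 1), computed as cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + c] * kernel[a][c] for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu_map(fmap):
    """ReLU layer: set every negative value in the feature map to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + c] for a in range(size) for c in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def fully_connected(fmap, weights, biases):
    """Flatten the feature map and compute one score per output class."""
    flat = [v for row in fmap for v in row]
    return [sum(w * v for w, v in zip(w_row, flat)) + b for w_row, b in zip(weights, biases)]


image = [[0, 0, 1, 1, 0] for _ in range(5)]  # hypothetical image: a bright vertical stripe
kernel = [[-1, 1], [-1, 1]]  # hypothetical filter: responds to dark-to-bright vertical edges
feature_map = relu_map(conv2d(image, kernel))  # 4x4 rectified feature map
pooled = max_pool(feature_map)                 # 2x2 after 2x2 max pooling
scores = fully_connected(pooled, [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]], [0.0, 0.0])
print(scores)  # prints [2.0, 0.0]
```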

Q) What is Overfitting and Underfitting of a model? How can it be avoided?

Overfitting occurs when a model learns the noise in the training data along with the underlying pattern. An overfit model performs well on the training data but poorly on new, unseen data. It is more likely to occur with flexible, nonlinear models that have many parameters, and it is one of the most commonly seen issues in deep learning. An overfit model has high variance and low bias; in practice, it shows a low training error but a much higher validation error. Overfitting can be reduced by using more training data, adding dropout, reducing the capacity of the network, and constraining the weights with regularization.

Underfitting occurs when a model neither fits the training data well nor generalizes to new data. It usually happens when the model is too simple for the problem or has not been trained long enough, so it does not learn the underlying pattern and cannot predict the right results. This results in poor performance and accuracy on both the training and test data (high bias). Underfitting can be addressed by increasing the capacity of the network, training for longer, or reducing regularization.
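Dropout, mentioned above, is a regularization technique that helps against overfitting, and it is simple to sketch. The version below is "inverted" dropout, a common variant assumed here: during training, each activation is zeroed with probability `p`, and the survivors are scaled by `1 / (1 - p)` so the expected activation is unchanged; at inference time, the activations pass through untouched. The function name and values are illustrative.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p during training,
    scaling the survivors by 1 / (1 - p) so the expected value is unchanged."""
    if not training or p == 0.0:
        return list(activations)
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)  # seeded so the example is repeatable
hidden = [0.2, 1.5, -0.7, 3.0, 0.9]
print(dropout(hidden, p=0.4, rng=rng))         # some entries zeroed, the rest scaled up
print(dropout(hidden, p=0.4, training=False))  # unchanged at inference time
```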

Q) What is RNN?

Recurrent neural networks (RNNs) are neural networks that take the output from the preceding step and use it as an input to the current step, which makes them suited to sequential data such as text or time series. An RNN has a hidden state that carries information along the sequence, so earlier inputs influence later outputs. RNNs are trained with backpropagation through time, which applies the backpropagation algorithm across the steps of the sequence.
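A minimal scalar sketch of the recurrence: each step combines the current input with the previous hidden state. The weight names `w_x`, `w_h`, and `b`, the tanh activation, and the values are assumptions for illustration (real RNNs use weight matrices and vector-valued hidden states). Feeding a single non-zero input followed by zeros shows the hidden state carrying information forward.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Scalar RNN cell: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    hidden_states = []
    for x_t in sequence:
        # The previous hidden state h feeds back into the current step.
        h = math.tanh(w_x * x_t + w_h * h + b)
        hidden_states.append(h)
    return hidden_states


states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.8, b=0.0)
print([round(s, 3) for s in states])  # later states are non-zero only because of h
```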

Q) What issues arise when implementing RNNs?

- Vanishing Gradient

When we perform backpropagation, the gradients become smaller as we keep moving backward layer by layer (or, in an RNN, step by step back through time). As a result, the neurons in the earlier layers learn much more slowly than those in the later layers. This is a problem because the earlier layers are the ones responsible for learning and detecting simple patterns; they are the building blocks of the neural network. With vanishing gradients, training becomes slow, the model's prediction accuracy suffers, and an RNN struggles to learn long-range dependencies.

- Exploding Gradient

Exploding gradients occur when large error gradients accumulate and result in very large updates to the model's weights during training. Gradient descent works best when updates are small and controlled; when the gradient magnitudes are too large, the network becomes unstable. This can lead to poor accuracy or even a model that fails to train.
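Both problems come from the chain rule: the gradient reaching an early layer (or an early time step in an RNN) is a product of many local derivatives. The simplified sketch below assumes every step contributes the same scalar factor, which real networks do not, but it shows why a factor below 1 makes the gradient vanish and a factor above 1 makes it explode. The function name is hypothetical.

```python
def gradient_through_steps(factor, steps):
    """Simplified model: each step backward multiplies the gradient by the same factor."""
    grad = 1.0
    history = [grad]
    for _ in range(steps):
        grad *= factor
        history.append(grad)
    return history


vanishing = gradient_through_steps(0.5, 20)  # e.g. small weights times small activation slopes
exploding = gradient_through_steps(1.5, 20)  # e.g. large recurrent weights
print(f"after 20 steps: vanishing={vanishing[-1]:.2e}, exploding={exploding[-1]:.2e}")
# prints after 20 steps: vanishing=9.54e-07, exploding=3.33e+03
```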

Q) What are the cost function and model capacity?

The cost function measures how well the neural network performs by comparing its predictions for the training samples with the expected outputs. Its value depends on the network's weights and biases, and it summarizes the performance of the network as a whole in a single number. In deep learning, our aim is to minimize the cost function, which is why we use gradient descent: it iteratively adjusts the weights and biases to reduce the cost.
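Two common cost functions are sketched below using their usual textbook definitions: mean squared error for regression and binary cross-entropy for two-class classification. Lower values mean the predictions are closer to the expected outputs. The function names and numbers are illustrative; the small `eps` clipping is an assumed safeguard against taking the log of 0.

```python
import math


def mean_squared_error(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def binary_cross_entropy(probabilities, labels, eps=1e-12):
    # eps keeps log() away from 0 when a probability is exactly 0 or 1.
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


print(mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0]))
print(binary_cross_entropy([0.9, 0.2], [1, 0]))  # confident, correct predictions: low cost
print(binary_cross_entropy([0.1, 0.8], [1, 0]))  # confident, wrong predictions: high cost
```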

The model capacity of a deep learning neural network controls the range of mapping functions that it can learn. A model with higher capacity (for example, more layers or more neurons per layer) can represent more complex functions and store more information from the training data, but it is also more prone to overfitting.
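Parameter count is a rough, commonly used proxy for capacity (an assumption here: capacity also depends on the architecture, activations, and training). This hypothetical helper counts the weights and biases of a fully connected network from its layer sizes.

```python
def count_parameters(layer_sizes):
    """Weights plus biases in a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


print(count_parameters([2, 3, 1]))       # small network
print(count_parameters([2, 64, 64, 1]))  # wider and deeper: far more parameters
```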

Q) What are some supervised and unsupervised learning algorithms in Deep Learning?

| Supervised learning algorithms | Unsupervised learning algorithms |
|---|---|
| Traditional neural networks / artificial neural networks (ANN) | Self-organizing maps |
| Convolutional neural networks (CNN) | Deep belief networks (built from stacked restricted Boltzmann machines) |
| Recurrent neural networks (RNN) | Autoencoders |

This sums up the kinds of basic questions asked in a typical deep learning interview. Apart from these, you should learn important frameworks such as TensorFlow, Keras, and PyTorch. Be aware of their features, and have at least a few basic projects in your portfolio implemented with these frameworks. You can also deepen your knowledge by learning about the unsupervised learning algorithms in deep learning.